HELP! My Hair Is On Fire And My Database Is Using 90% Memory

One of the more common inquiries we encounter is a question about memory and/or swap utilization for database instances. Often this comes with a request to assist in upgrading to a larger database instance. Many times the upgrade isn’t actually necessary and we take some time to share how databases such as MySQL and Postgres leverage memory. In light of how often we answer this question it seemed like a fitting topic to share more about on our blog.

The rule of thumb is that disk is about 1/10th the speed of system memory. While rule can vary slightly depending on the hardware, the bottom line is that disk is nowhere near as fast as memory. As a result, an efficient deployment of MySQL or Postgres will be configured such that the database will use as much memory as possible without leaving the system in a state where it might become unstable or swap regularly.

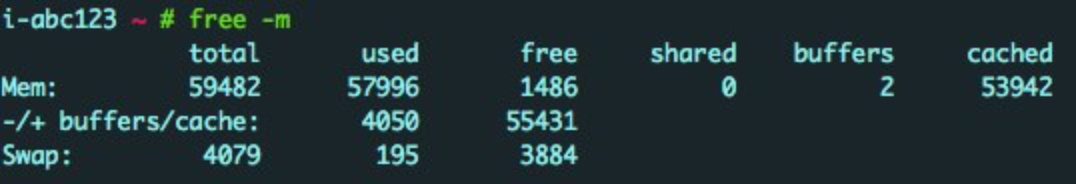

Postgres relies on the file system cache for maintaining data and indexes in memory. Postgres also leverages a large shared buffer (~25% of available memory) as well as several small system and per-connection buffers. When looking at the output of the command free -m you might see something like the following:

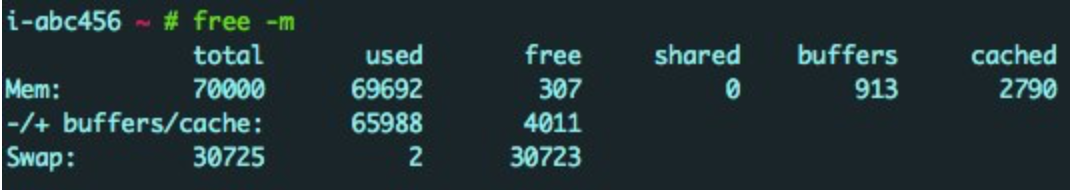

MySQL is tuned by default for the (transactional) InnoDB engine; the standard for many modern development frameworks. The InnoDB Buffer Pool maintains InnoDB data and indexes in memory so is tuned to use 70% to 80% of available system memory for this purpose (depending on instance size). In addition to that MySQL will allocate a certain amount of memory for several other system and per-connection buffers. Since MySQL uses its own buffer for InnoDB, the memory utilization pattern looks quite a bit different:

MySQL is tuned by default for the (transactional) InnoDB engine; the standard for many modern development frameworks. The InnoDB Buffer Pool maintains InnoDB data and indexes in memory so is tuned to use 70% to 80% of available system memory for this purpose (depending on instance size). In addition to that MySQL will allocate a certain amount of memory for several other system and per-connection buffers. Since MySQL uses its own buffer for InnoDB, the memory utilization pattern looks quite a bit different:

For both databases it is not uncommon or problematic to see memory utilization at or even above 95%. Typically this memory usage level is not a big concern unless you encounter Swap.

For both databases it is not uncommon or problematic to see memory utilization at or even above 95%. Typically this memory usage level is not a big concern unless you encounter Swap.

What about Swap

Swap, also referred to as ‘paging’, is a disk based extension of a computer’s physical memory. As data is loaded into memory the OS kernel monitors the memory usage and occasionally pushes some less frequently used pages out to swap to ensure that there is memory readily available when new data needs to be added. While this space is setup in a way that makes it more efficient to access than general disk is still based on disk; therefore, considerably slower than memory. (More on Swap.)

There are basically two schools of thought on swap allocation for servers. The first is that swap should be allocated on a descending scale starting at 2x system memory and declining to 0.75x system memory over a certain threshold. The second is that you should tightly control your system, codebase, and configuration and not allocate any swap space to absolutely prevent any swap activity. While both arguments hold merit; we operate under the first of these since our platform needs to support the widest possible use case.

The scale we use for swap allocation looks like this:

System Memory Swap Allocation

__< 2GB Memory x 2

2GB - 8GB Memory x 1.5

> 8GB* Memory x 0.75

- generally maximum swap size is capped at 8GB.

All of our instances have monitoring and alerting tracking both the amount of swap in use, as well as the swap read and write rates. The absence of these alerts doesn’t mean swap isn’t impacting your application, so it is still important to consider your performance graphs, and even system level statistics when troubleshooting performance issues.

When we encounter any swap alerts the first thing we look for is the setting of vm.swappines in the OS (sysctl -a |grep swap). This setting controls how likely the kernel is to push pages out to swap vs simply dropping them from the cache. The default for our AMI is generalized at 60 but for dedicated databases we set this to 15. If your database is on Cloud and uses a setting of 60 still, you may want to review the environment updates available for your deployment to see if that update is still pending.

After the “swappiness” level has been confirmed we’ll look for several other factors:

- is the host responsible for any kind of backup operations

- are there heavy queries that may be making use of large temporary tables

- are there other processes running on the system that may be using memory space that the database "expects" will be available to it

Solo instances and Swap

A Standalone or Solo instance is a special animal. These instances are not recommended for Production use and, by design, split the available system memory 50/50 between the application and the database. The resource demands for application and database services is very different and it is not uncommon for the application services on these hosts (including background tasks) to consume more than that 50% of memory. The typical small size (usually a small or medium instance) also means that these can go from good, to bad, to failed very quickly.

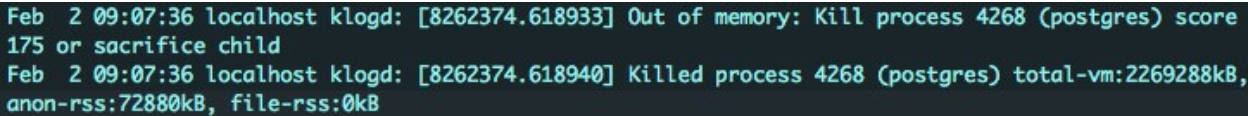

Monitoring is the same for these instances however, their limited memory and swap space can be filled very quickly leaving little time for a warning to be addressed. The most common failure to encounter is that the OS runs out of both memory and swap and then has to run a process called the ‘OOM Killer’ (Out Of Memory Process Killer). This system task analyzes the processes that are utilizing memory and gives each one a score. Due to the amount of memory typically used by MySQL or Postgres they often end up having the highest score and get terminated as a result. Typically a message like the following will be found in the syslog (/var/log):

To recover from this type of error you can typically use the following sequence of commands to restart the database:

To recover from this type of error you can typically use the following sequence of commands to restart the database:

- /etc/init.d/mysql stop

- /etc/init.d/mysql zap

- /etc/init.d/mysql start

- In the case of Postgres this is normally only required if the postmaster process is terminated. For this case use /etc/init.d/postgresql-{major_version} with each of the stop, zap, and start options.

In order to prevent these failures:

- Consider migrating to clustered instances which dedicate the resources separately to application and database instances. This is generally the best way to avoid these types of failures for both Postgres and MySQL.

- Review the configuration of your Application workers to utilize fewer resources. Reducing the number of workers or the amount of memory per worker is a common way of addressing this.

- For MySQL you can consider allocating a smaller InnoDB Buffer Pool. Results may vary with this approach since this does require a database restart. The reduced data cached in memory may also have a negative impact on performance.

- Evaluate using an instance with a larger amount of memory. Typically this will be a last resort since a larger instance means more workers configured by default and may just result in the same issue on a larger instance.

The moral of the story is that high memory usage on databases is normal and doesn’t by itself indicate that the database is in need of additional resources. Understanding how your database is using memory, and the interactions that can occur between your application and data resources can help you to avoid costly and often unnecessary upgrades. When in doubt, please put out your hair and file a ticket with our Data Support Engineers; we’d be happy to review your usage and make recommendations for your deployment.

Share your thoughts with @engineyard on Twitter