Autoscalers the Hard Way

If you’ve been following the Deis blog, you may have seen their post showing how to build a custom Kubernetes scheduler with Elixir.

That post was inspired by a recent talk by Kelsey Hightower.

Kelsey also tweeted:

It's not that everyone should go out and build custom schedulers, but they should know how if they need too.

— Kelsey Hightower (@kelseyhightower) June 8, 2016

I would take this logic and apply it to autoscalers too.

While not everyone needs to build their own autoscaler, it can be beneficial to understand how they work. And one of the best ways to gain that understanding is to try writing one yourself.

At its core, an autoscaler is a self-correcting autonomous feedback system.

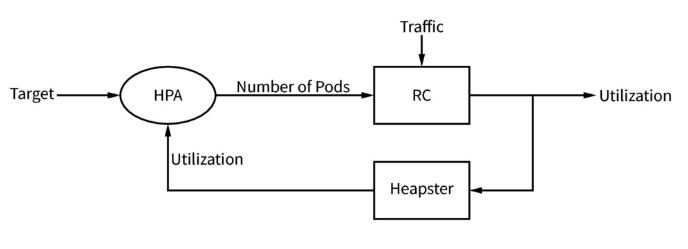

In my DevOpsDays talk in Minneapolis, I mentioned that any autoscaler will have the following architecture:

Borrowing from control theory, an autoscaler can be distilled into:

- Plant: the system we are trying to modify. In Kubernetes, this is the replication controller or deployment resource that we scale out to serve our application's traffic.

- Sensor: queries the plant and relays to the controller the information needed to self-correct the system. In Kubernetes, this is the Heapster API, which reports the CPU utilization of our pods and containers.

- Controller: this is the autoscaler. It is configured with a target. The target value is then compared to the output of the system. Depending on the difference, corrections are made to the plant to regulate the behavior.
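To make the three roles concrete, here is a minimal Ruby sketch of one pass through the feedback loop. The class names, the total load figure, and the one-replica-per-step correction are illustrative assumptions, not how Kubernetes or Heapster implement them.

```ruby
# Hypothetical stand-ins for the three roles; names and numbers are illustrative.

# Plant: the thing being scaled. Here it is just a replica count.
class Plant
  attr_accessor :replicas

  def initialize(replicas)
    @replicas = replicas
  end
end

# Sensor: reports average CPU utilization (percent) per replica,
# assuming a fixed total load that is spread evenly.
class Sensor
  def initialize(plant, total_load)
    @plant = plant
    @total_load = total_load
  end

  def utilization
    @total_load.to_f / @plant.replicas
  end
end

# Controller: compares the measured output with the target and decides
# which direction to correct.
class Controller
  def initialize(target)
    @target = target
  end

  # Returns the change in replicas: +1 to scale out, -1 to scale in, 0 to hold.
  def correction(output)
    error = output - @target
    return 0 if error.zero?
    error.positive? ? 1 : -1
  end
end

plant = Plant.new(2)
sensor = Sensor.new(plant, 300)   # assumed total load, in percent-units
controller = Controller.new(50)   # target: 50% CPU per replica

output = sensor.utilization       # 300 / 2 replicas
plant.replicas += controller.correction(output)
```

With two replicas sharing the assumed load, measured utilization is well above the target, so the controller asks for one more replica. A fixed step like this is the simplest possible correction; it reacts slowly to large errors, which is why real autoscalers usually scale in proportion to the error.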

The built-in Kubernetes Horizontal Pod Autoscaler (HPA) is configured with the target CPU utilization of our application. It then reads the actual CPU utilization from the Heapster API.

After comparing the actual and target CPU utilization, the HPA scales the pods out or in through our application's replication controller.
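That comparison can be reduced to a ratio. The Kubernetes documentation describes the HPA's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and the Ruby sketch below applies that ratio. The function name and the `min`/`max` bounds are assumptions for illustration, and the real HPA also applies a tolerance and other rules that are left out here.

```ruby
# Hypothetical helper: desired replica count from the ratio of actual to target
# utilization, clamped to assumed minimum and maximum bounds.
def desired_replicas(current_replicas, actual_utilization, target_utilization, min: 1, max: 10)
  ratio = actual_utilization.to_f / target_utilization
  (current_replicas * ratio).ceil.clamp(min, max)
end

desired_replicas(4, 90, 60)   # above target: 4 * 1.5 -> scale out to 6
desired_replicas(4, 30, 60)   # below target: 4 * 0.5 -> scale in to 2
desired_replicas(4, 60, 60)   # on target: stays at 4
```

Rounding up with `ceil` errs on the side of having slightly too much capacity rather than too little.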

Here’s a simple control-loop implementation of the autoscaler described above:

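```ruby
# Control loop: measure, decide, act, report, then wait for the next tick.
# Assumes sensor, controller, and actuator objects are already set up.
loop do
  output = sensor.utilization          # measured CPU utilization
  instances = controller.work(output)  # desired number of replicas
  actuator.scale(instances)            # apply the correction to the plant

  # Emit both metrics with a Unix timestamp.
  now = Time.now.to_i
  puts "demo.replication_controller.replicas #{instances} #{now}"
  puts "demo.pods.cpu_utilization #{output} #{now}"

  sleep 5
end
```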
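The loop above assumes that `sensor`, `controller`, and `actuator` objects already exist. As a rough way to try it without a cluster, the sketch below wires it to in-memory stand-ins and runs a fixed number of ticks instead of looping forever. Everything here (the `FakeCluster` class, the fixed load of 400, the proportional controller) is a hypothetical test harness, not the implementation from the GitHub repository.

```ruby
# Hypothetical in-memory harness for the control loop; no Kubernetes involved.

# Plays both sensor and actuator: utilization falls as replicas are added.
class FakeCluster
  attr_reader :replicas

  def initialize(replicas:, total_load:)
    @replicas = replicas
    @total_load = total_load
  end

  # Sensor role: average CPU utilization (percent) per replica.
  def utilization
    @total_load.to_f / @replicas
  end

  # Actuator role: set the replica count.
  def scale(instances)
    @replicas = instances
  end
end

# Controller role: picks the replica count that would bring utilization
# back to the target, never going below one replica.
class ProportionalController
  def initialize(target:, cluster:)
    @target = target
    @cluster = cluster
  end

  def work(output)
    [(@cluster.replicas * output / @target).ceil, 1].max
  end
end

cluster = FakeCluster.new(replicas: 2, total_load: 400)
controller = ProportionalController.new(target: 50, cluster: cluster)

# Same steps as the loop, but bounded and without sleeping.
history = []
3.times do
  output = cluster.utilization
  instances = controller.work(output)
  cluster.scale(instances)
  history << [output, instances]
end
```

In this idealized setup the first tick sees utilization far above the target and scales out, and later ticks measure utilization at the target and hold steady. A real system has lag between scaling and the metric changing, so it will not settle this cleanly.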

I’ve put a full implementation of this simple autoscaler on GitHub if you want to play around with it. And if you want to know more about building autoscalers (with the help of math), check out the recording of my DevOpsDays Minneapolis talk.
